How to Prepare For New AI Regulations

Until recently, the regulation of AI was left up to the organizations developing the technology, allowing these organizations to apply their own judgment and ethical guidelines to the products and services they create.

Although this is still widely true, it may be about to change. New regulations are on the horizon, and some have already been signed into law, with requirements that could mean costly fines for non-compliance.

In this post, we look at some themes across this legislation and give you some tips to begin your preparation.

- AI Regulatory Landscape

- Common Themes in AI Regulations

- How Can Companies Prepare for AI Compliance?

- Get in Touch

For a broader approach and ideas about what can be done in practice, visit a summary of the AI security and strategy services we offer.

AI Regulatory Landscape

When you think of regulations surrounding AI, your mind probably wanders to the use of the technology in things like weapons systems or public safety. In fact, the potential harm from these systems extends far beyond these narrow categories.

Many developers are just using the tools available to them. They create experiments, evaluate the final result on a simple set of metrics, and ship to production if it meets a threshold. They aren’t thinking specifically about issues related to risk, safety, and security.

AI systems can be unpredictable, which is ironic since often you are using them to predict something. Why unpredictability surfaces is beyond the scope of this post, but it has to do with both technical and human factors.

We’ve had laws that indirectly regulate AI for quite some time and probably haven’t realized it. Not all of these regulations mention AI by name. They may be part of other consumer safety legislation.

For example, the Fair Credit Reporting Act may come into play when making automated decisions about creditworthiness and dictate the data used. In the context of machine learning, this applies to the data used to train a system.

So, if any current regulation prohibits specific pieces of information from being used, such as protected classifications (race, gender, religion, etc.), or prohibits specific practices, then it would also apply to AI, whether it spells it out directly or not.
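As a minimal sketch of how a prohibition like this might be checked in a training pipeline, the snippet below flags feature columns whose names match a list of protected attributes. The attribute list and the function name `find_protected_features` are assumptions made for illustration and are not taken from any regulation. A name match also won’t catch proxy features that stand in for a protected attribute, so treat this as a first pass only.

```python
# Hypothetical list for illustration; the attributes that actually apply
# depend on the regulation and the jurisdiction.
PROTECTED_ATTRIBUTES = {"race", "gender", "religion"}


def find_protected_features(feature_names):
    """Return the feature names that match a protected attribute."""
    flagged = []
    for name in feature_names:
        # Compare case-insensitively and ignore surrounding whitespace.
        normalized = name.strip().lower()
        if normalized in PROTECTED_ATTRIBUTES:
            flagged.append(name)
    return flagged


features = ["income", "Gender", "loan_amount", "religion"]
print(find_protected_features(features))
```

A check like this could run before training starts, so a flagged column stops the job instead of quietly ending up in the model.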

Governments and elected officials are waking up to the harm that can result from the unpredictability of AI systems. One early indicator of this is in GDPR Recital 71.

In summary, GDPR Recital 71 describes a right to explanation. If there is an automated process for determining whether someone gets a loan or not, a person denied has a right to be told why they were rejected. Hint: telling someone that one of the neurons in your neural network found them unworthy isn’t an acceptable explanation.

Recently, the EU released a proposal specifically targeting AI systems and system development. This proposed legislation outlines requirements for high-risk systems as well as prohibitions on specific technologies, such as those meant to influence mood as well as ones that create grades like a social score.

Similar legislation was introduced in the US, the Algorithmic Accountability Act, but it did not pass. The US Government did, however, release a draft memo on the regulation of AI. This document covers the evaluation of risks as well as issues specific to safety and security.

The failure of the US legislation doesn’t mean that individual US states aren’t taking action on this issue. One example of this is Virginia’s Consumer Data Protection Act.

This is far from an exhaustive list, but one thing is certain: more regulation is coming, and organizations need to prepare. In the short term, these regulations will continue to lack cohesion and focus and will be hard to navigate.

Read more from Nathan on our blog: “Bridging the AI Security Divide” >>

Common Themes in AI Regulations

Even though the specifics of these regulations vary across the geographic regions, some high-level themes tie them together.

Responsibility

The overarching goal of regulation is to inform and hold accountable. These new regulations push the responsibility for these systems onto the creators. Acting irresponsibly or unethically will cost you.

Scope

Each regulation has a scope and doesn’t apply universally to all applications. Some have a broad scope, and some are very narrow. They can also lack common definitions, making it hard to determine whether your application is in scope. Regulations may specifically call out a use case or may imply it through a definition of data protection.

Most lawmakers aren’t technologists, so expect differences across the legislation you encounter, and look for the common themes.

Risk Assessments and Mitigations

A major theme of all the proposed legislation is understanding risk and providing mitigations. This assessment should evaluate risks both to and from the system. None of the regulations dictate a specific approach or methodology, but you’ll have to show that you evaluated risks and what steps you took to mitigate those risks. So, in simple terms, how would your system cause harm if it is compromised or fails, and what did you do about it?

Validation

Rules aren’t much good without validation. You’ll have to provide proof of the steps you took to protect your systems. In some cases, this may mean algorithmic verification by providing ongoing testing. The output of the testing could be proof you show to the auditor.
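One way to picture that proof is a small, timestamped record written every time a validation check runs. The sketch below is an assumption about what such a record could contain; the field names, the `record_validation` helper, and the example model name are all hypothetical, and none of the regulations discussed here prescribes this format.

```python
import json
from datetime import datetime, timezone


def record_validation(model_name, metric_name, value, threshold):
    """Build an audit record for one validation check."""
    return {
        "model": model_name,
        "metric": metric_name,
        "value": value,
        "threshold": threshold,
        # The check passes when the metric meets or exceeds the threshold.
        "passed": value >= threshold,
        "checked_at": datetime.now(timezone.utc).isoformat(),
    }


record = record_validation("loan-scoring", "accuracy", 0.91, 0.90)
print(json.dumps(record, indent=2))
```

Appending these records to a log on every test run gives you a history you can hand over, rather than a one-time snapshot.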

Explainability

Simply put, why did your system make the decision it did? What factors led to the decision?

AI explainability also plays a role outside of regulation. Coming up with the right decision isn’t good enough. When your systems lack explainability, they may make the right decision but for the wrong reason. Based on issues with data, the system may “learn” a feature that has high importance but, in reality, isn’t relevant.

How Can Companies Prepare for AI Compliance?

The time to start preparing is now, and you can use the themes of current and proposed regulation as a starting point. It will take some time, depending on your organization and the processes and culture currently in place.

AI Strategy and Governance

A key foundation in compliance is the implementation of a strategy and governance program tailored to AI. An AI strategy and governance program allows organizations to implement specific processes and controls and audit compliance.

This program will affect multiple stakeholders, so it shouldn’t be any single person’s sole responsibility. Assemble a collection of stakeholders into an AI governance working group and, at a minimum, include members from the business, development, and security teams.

Inventory

You can’t prepare for or protect what you don’t know about. Taking and maintaining a proper inventory of AI projects and their criticality levels to the business is a vital first step. Business criticality levels can feed into other phases, such as risk assessments. A byproduct of the inventory is that you communicate with the teams developing these systems and gain feedback for your AI strategy.
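An inventory doesn’t need special tooling to get started. As a rough sketch, each project could be a record with an owner and a criticality level, so the list can be sorted when deciding where to run risk assessments first. The `AIProject` fields, the three-level `Criticality` scale, and the example projects below are assumptions for illustration only.

```python
from dataclasses import dataclass
from enum import IntEnum


class Criticality(IntEnum):
    # Hypothetical three-level scale; use whatever your business defines.
    LOW = 1
    MEDIUM = 2
    HIGH = 3


@dataclass
class AIProject:
    name: str
    owner_team: str
    criticality: Criticality
    uses_sensitive_data: bool = False


def by_criticality(inventory):
    """Sort projects so the most business-critical come first."""
    return sorted(inventory, key=lambda p: p.criticality, reverse=True)


inventory = [
    AIProject("chat-routing", "support", Criticality.LOW),
    AIProject("loan-scoring", "lending", Criticality.HIGH, uses_sensitive_data=True),
    AIProject("demand-forecast", "operations", Criticality.MEDIUM),
]

for project in by_criticality(inventory):
    print(project.criticality.name, project.name)
```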

Implement Threat and Risk Assessments

A central theme across all of the new regulations is an explicit call for risk assessments. Implementing an approach where you evaluate both threats and risks will give you a better picture of the protection mechanisms necessary to protect the system and mitigate potential risks and abuses.

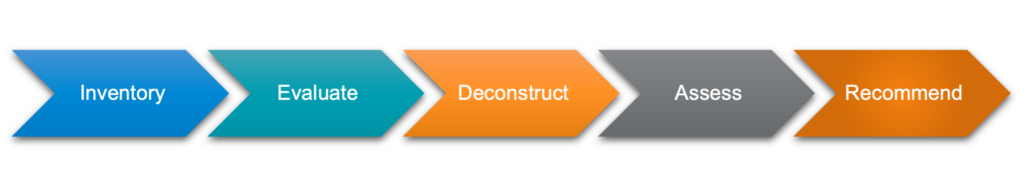

At Kudelski Security, we have a simple five-phase approach for evaluating threats and risks to AI systems. This approach provides tactical feedback to stakeholders for quick mitigation.

KS AI Threat and Risk Assessment

If you are looking for a quick gut check on the risk of the system, ask a few questions.

- What does the system do?

- Does it support a critical business process?

- Was it trained on sensitive data?

- How exposed is the system going to be?

- What would happen if the system failed?

- Could the system be misused?

- Does it fall under any regulatory compliance?
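The questions above could be turned into a rough triage helper, as in the sketch below. This is not the Kudelski Security assessment methodology. The yes/no rewording of the questions, the `gut_check` function, and the cut-offs for "high" and "medium" are all assumptions chosen for illustration.

```python
# Yes/no versions of the questions above. "What does the system do?" is
# open-ended, so it is left to a written description.
GUT_CHECK_QUESTIONS = [
    "Does it support a critical business process?",
    "Was it trained on sensitive data?",
    "Is the system exposed to external users?",
    "Would a failure cause harm?",
    "Could the system be misused?",
    "Does it fall under any regulatory compliance?",
]


def gut_check(answers):
    """Count 'yes' answers and map the count to a rough triage label."""
    if len(answers) != len(GUT_CHECK_QUESTIONS):
        raise ValueError("expected one answer per question")
    yes_count = sum(1 for answer in answers if answer)
    # The cut-offs below are arbitrary assumptions, not a standard.
    if yes_count >= 4:
        return "high"
    if yes_count >= 2:
        return "medium"
    return "low"


print(gut_check([True, True, False, True, False, True]))
```

A label like this is only a prompt for where to look first; it doesn’t replace a full threat and risk assessment.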

For a deeper dive on assessing AI risk, check out our webcast with Black Hat, “Preventing Random Forest Fires: AI Risk and Security First Steps.”

Develop AI-Specific Testing

Testing and validation of systems implementing machine learning and deep learning technology require different approaches and tooling. An AI system combines traditional and non-traditional platforms, meaning that standard security tooling won’t be effective across the board. However, depending on your current tooling and environment, standard tooling could be a solid foundation.

Security testing for these systems should be more cooperative than some of the more traditional adversarial approaches. Testing should include working with developers to get more visibility and creating a security pipeline to test attacks and potential mitigations.

It may be better to think of security testing in the context of AI more as a series of experiments than as a one-off testing activity. Experiments from both testing and proposed protection mechanisms can be done alongside the regular development pipeline and integrated later. AI attack and defense is a rapidly evolving space, so having a separate area to experiment apart from the production pipeline ensures that experimentation can happen freely without affecting production.

Documentation

Models aren’t useful on their own. They require supporting infrastructure and may be distributed across many devices. This distribution is why documentation is critical. Understanding data usage and how all of the components work together allows for a better determination of the threats and risks to your systems.

Focus on AI Explainability

Explainability, although not always called out in the legislation, is implied. After all, you can’t tell someone why they were denied a loan if you don’t have an explanation from the system.

Explainability is important in a governance context as well. Ensuring you are making the right decision for the right reasons is vital for the normal operation of a system.

Some models are more explainable than others. When benchmarking model performance, it’s a good idea to compare the model against a simpler, more explainable one. The performance may not be that different, and what you get in return is something more predictable and explainable.
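To make that comparison concrete, here is a minimal sketch that checks whether a simpler model’s accuracy is within a chosen tolerance of the complex model’s. The `prefer_simple_model` helper, the tolerance values, and the toy predictions are assumptions for illustration. In practice you would use held-out data and whatever metric matters for your use case.

```python
def accuracy(predictions, labels):
    """Fraction of predictions that match the labels."""
    if not labels or len(predictions) != len(labels):
        raise ValueError("predictions and labels must be non-empty and equal in length")
    correct = sum(1 for p, y in zip(predictions, labels) if p == y)
    return correct / len(labels)


def prefer_simple_model(complex_preds, simple_preds, labels, tolerance=0.02):
    """Return True when the simple model is within `tolerance` of the complex one."""
    gap = accuracy(complex_preds, labels) - accuracy(simple_preds, labels)
    return gap <= tolerance


labels = [1, 0, 1, 1, 0, 1, 0, 0, 1, 0]
complex_preds = [1, 0, 1, 1, 0, 1, 0, 0, 1, 1]  # 9 of 10 correct
simple_preds = [1, 0, 1, 0, 0, 1, 0, 0, 1, 1]   # 8 of 10 correct

# With a generous tolerance, the simpler model is close enough to prefer.
print(prefer_simple_model(complex_preds, simple_preds, labels, tolerance=0.15))
```

How much accuracy you are willing to trade for explainability is a business decision, so the tolerance belongs with the governance group rather than hard-coded by a single developer.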

Get in Touch

“Move fast and break things” is a luxury you can afford when the cost of failure is low. More and more machine learning is making its way into high-risk systems. By implementing a strategy and governance program and AI-specific controls, you can reduce your risk and attack surface and comply with regulations. If you’re looking for assistance with your AI security strategy, get in touch with our team of experts here.